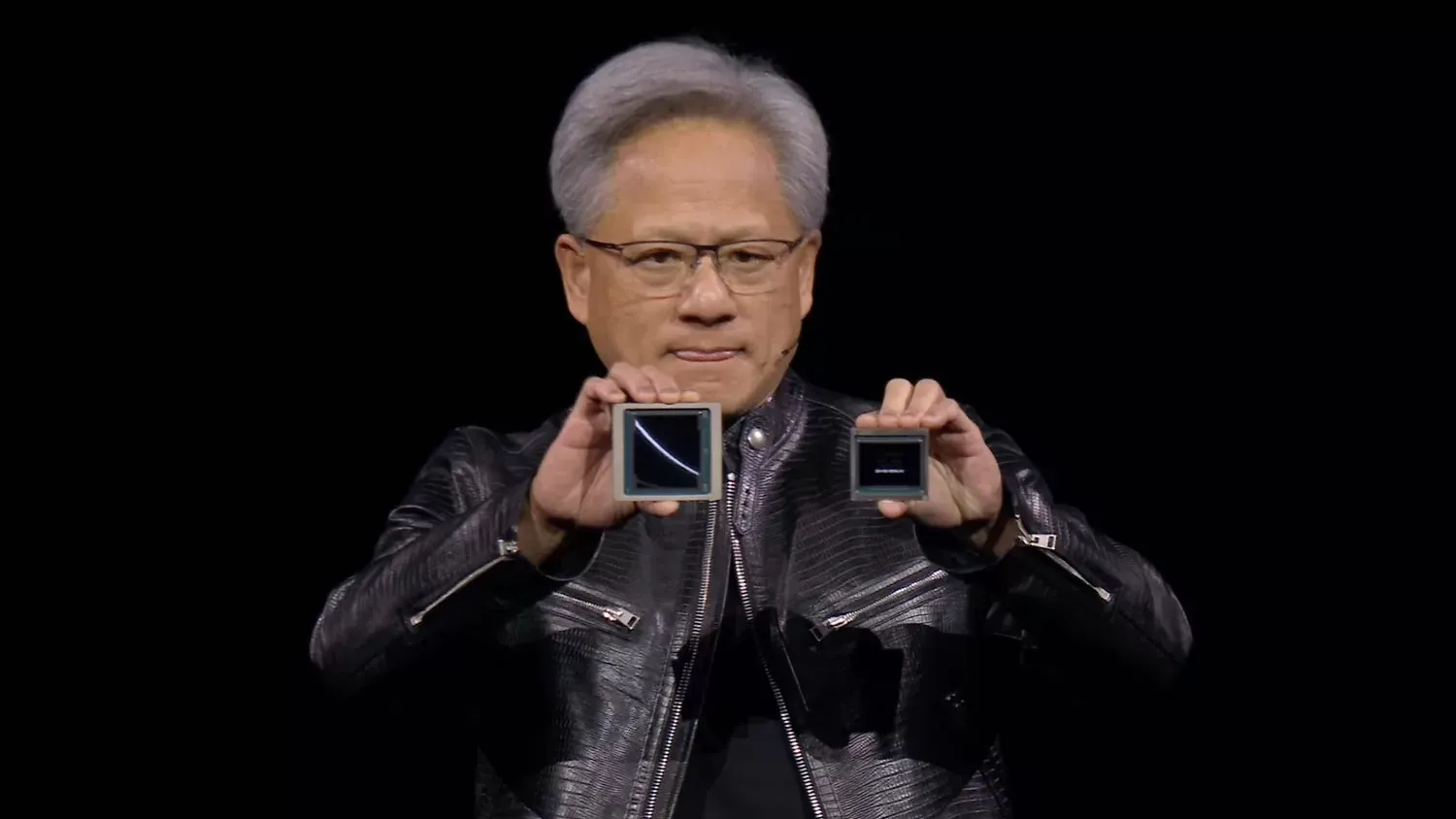

Nvidia has announced two new chips designed specifically for artificial intelligence: the Blackwell B200 GPU and the GB200 "superchip". Nvidia claims they deliver a major jump in performance while using less power for the same workload.

Source: *‘We Created a Processor for the Generative AI Era,’ NVIDIA CEO Says* (NVIDIA Blog): "Kicking off the biggest GTC conference yet, NVIDIA founder and CEO Jensen Huang unveils NVIDIA Blackwell, NIM microservices, Omniverse Cloud APIs and more."

In this post, I have collected the key points about the new chips:

- The B200 GPU contains 208 billion transistors and delivers up to 20 petaflops of FP4 compute

- Each B200 GPU has 192 GB of HBM3e memory with 8 TB/s of bandwidth, which suits large language models (LLMs) that need both large memory capacity and high bandwidth

- The GB200 "superchip" combines two B200 GPUs with one Arm-based Grace CPU. Nvidia claims it delivers up to 30 times the LLM inference performance of the H100 when running in FP4

- A second-generation Transformer Engine, which Nvidia says doubles compute, bandwidth and supported model size

- Support for a new FP6 format, a middle ground in speed and accuracy between FP4 and FP8

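- Training a GPT-4-scale model (about 1.8 trillion parameters) in 90 days took 8,000 previous-generation Hopper GPUs and 15 megawatts of power. With Blackwell, the same job needs only 2,000 GPUs and 4 megawatts, so GPT-5 may be just around the corner

- For scale, the B200's 20 petaflops of FP4 compute is roughly 1,900 times the PS5's 10.3 teraflops, although the PS5 figure is FP32, so the two numbers are not directly comparable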
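The numbers in the list above can be sanity-checked with a few lines of arithmetic. The Python sketch below is a rough calculation, not a benchmark: it assumes 1 petaflop = 1,000 teraflops and 1 GB = 10^9 bytes, counts model weights only (ignoring activations and the KV cache), and uses hypothetical helper names. Keep in mind that the PS5 figure is FP32 while the B200 figure is FP4, so their ratio compares different precisions.

```python
# Back-of-envelope checks on the figures quoted in this post.
# Assumptions: 1 petaflop = 1,000 teraflops, 1 GB = 10**9 bytes,
# and only model weights are counted (no activations or KV cache).

B200_FP4_PETAFLOPS = 20.0   # peak FP4 compute per B200, as quoted
PS5_FP32_TERAFLOPS = 10.3   # PS5 GPU, FP32
B200_MEMORY_GB = 192        # HBM per B200

# Bits used to store one parameter in each format mentioned above.
BITS_PER_PARAM = {"FP8": 8, "FP6": 6, "FP4": 4}


def throughput_ratio(petaflops: float, teraflops: float) -> float:
    """How many times larger the petaflop figure is than the teraflop figure."""
    return petaflops * 1_000 / teraflops


def reduction(old: float, new: float) -> float:
    """Factor by which a quantity shrinks going from old to new."""
    return old / new


def max_params_billion(memory_gb: float, bits_per_param: int) -> float:
    """Largest weight-only model, in billions of parameters, that fits in memory.

    memory_gb * 1e9 bytes * 8 bits/byte / bits_per_param parameters,
    divided by 1e9 to express the result in billions.
    """
    return memory_gb * 8 / bits_per_param


if __name__ == "__main__":
    ratio = throughput_ratio(B200_FP4_PETAFLOPS, PS5_FP32_TERAFLOPS)
    print(f"B200 (FP4) vs PS5 (FP32): ~{ratio:,.0f}x")
    print(f"GPUs for the 90-day run: {reduction(8_000, 2_000):.2f}x fewer")
    print(f"Power for the 90-day run: {reduction(15, 4):.2f}x lower")
    for fmt, bits in BITS_PER_PARAM.items():
        params = max_params_billion(B200_MEMORY_GB, bits)
        print(f"{fmt}: weights of up to ~{params:.0f}B parameters fit in {B200_MEMORY_GB} GB")
```

Run as a script, it prints a ratio of about 1,942x, reductions of 4.00x (GPUs) and 3.75x (power), and weight-only capacities of roughly 192B, 256B and 384B parameters for FP8, FP6 and FP4. Because the run length is fixed at 90 days in both cases, the 3.75x power reduction is also the energy reduction.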

Prices for the new chips have not been announced yet. However, Amazon, Google, Microsoft and Oracle are expected to be among the first to receive them, and Amazon is already planning a cluster of 20,000 GB200 superchips.
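To put the Amazon cluster in perspective, the following Python sketch multiplies out the per-chip figures quoted in this post. It assumes every GB200 carries two B200 GPUs at the quoted 20 FP4 petaflops and 192 GB each, and it reports theoretical peaks only; a real cluster delivers less because of networking, parallelism overhead and utilization. The names are illustrative.

```python
from typing import NamedTuple

# Per-chip figures quoted in this post (peak values).
GB200_COUNT = 20_000        # cluster size reported for Amazon
B200_PER_GB200 = 2          # each GB200 pairs two B200 GPUs with one Grace CPU
B200_FP4_PETAFLOPS = 20.0   # peak FP4 compute per B200
B200_MEMORY_GB = 192        # HBM per B200


class ClusterTotals(NamedTuple):
    gpus: int
    fp4_exaflops: float
    hbm_petabytes: float


def cluster_totals(superchips: int) -> ClusterTotals:
    """Theoretical peak totals for a cluster of GB200 superchips."""
    gpus = superchips * B200_PER_GB200
    return ClusterTotals(
        gpus=gpus,
        fp4_exaflops=gpus * B200_FP4_PETAFLOPS / 1_000,   # 1 exaflop = 1,000 petaflops
        hbm_petabytes=gpus * B200_MEMORY_GB / 1_000_000,  # 1 PB = 1,000,000 GB
    )


if __name__ == "__main__":
    t = cluster_totals(GB200_COUNT)
    print(f"{t.gpus:,} B200 GPUs, ~{t.fp4_exaflops:,.0f} FP4 exaflops peak, "
          f"~{t.hbm_petabytes:.2f} PB of HBM")
```

For 20,000 superchips this prints 40,000 GPUs, about 800 FP4 exaflops and about 7.68 PB of HBM.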