ChatGPT is currently one of the best chatbots on the market, but it has drawbacks. For example, every request to the GPT model is processed on third-party servers, which makes it a poor fit for sensitive data. This is one reason open source LLMs, whose source code is openly available and which can run on a local computer, have been developing so actively.

Enthusiasts believe that a time will come when every user can easily run a personal assistant on a computer or even a phone, with quality comparable to ChatGPT.

Unfortunately, that time has not arrived yet: today's open source LLMs still lag well behind GPT-4. Still, they have improved rapidly in recent years, and many of them are light enough on resources to run on an ordinary PC or even a laptop.

Until recently, running an open source LLM on a local device required at least some programming knowledge. The Jan project changes that: it lets you run almost any open source model on a personal computer without any programming skills. In this article, we will look at how an average user can work with Jan.

What is Jan?

Jan is an open source application that lets you run almost any open source LLM through a polished interface. All you need to do is install Jan, download the model weights, and start chatting with the model just as you would in ChatGPT.

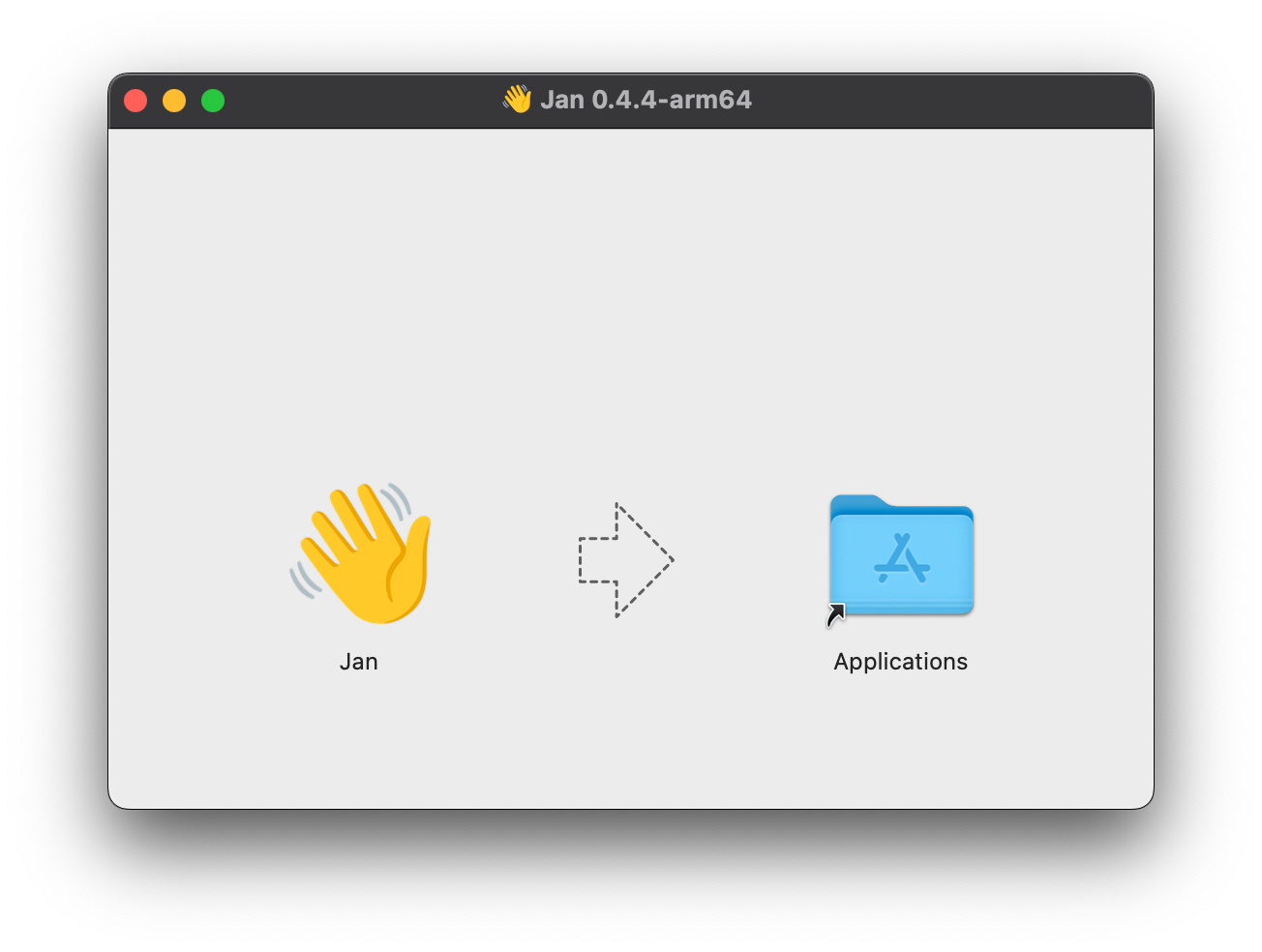

Installing Jan

Jan is available for Mac (Apple Silicon M1, M2, M3, as well as Intel), Windows, and Linux. An Apple Silicon chip or a dedicated graphics card will noticeably speed up your assistant's responses. You can download the application from the project's official website; installing it works the same way as installing any other program on your OS.

Downloading an LLM

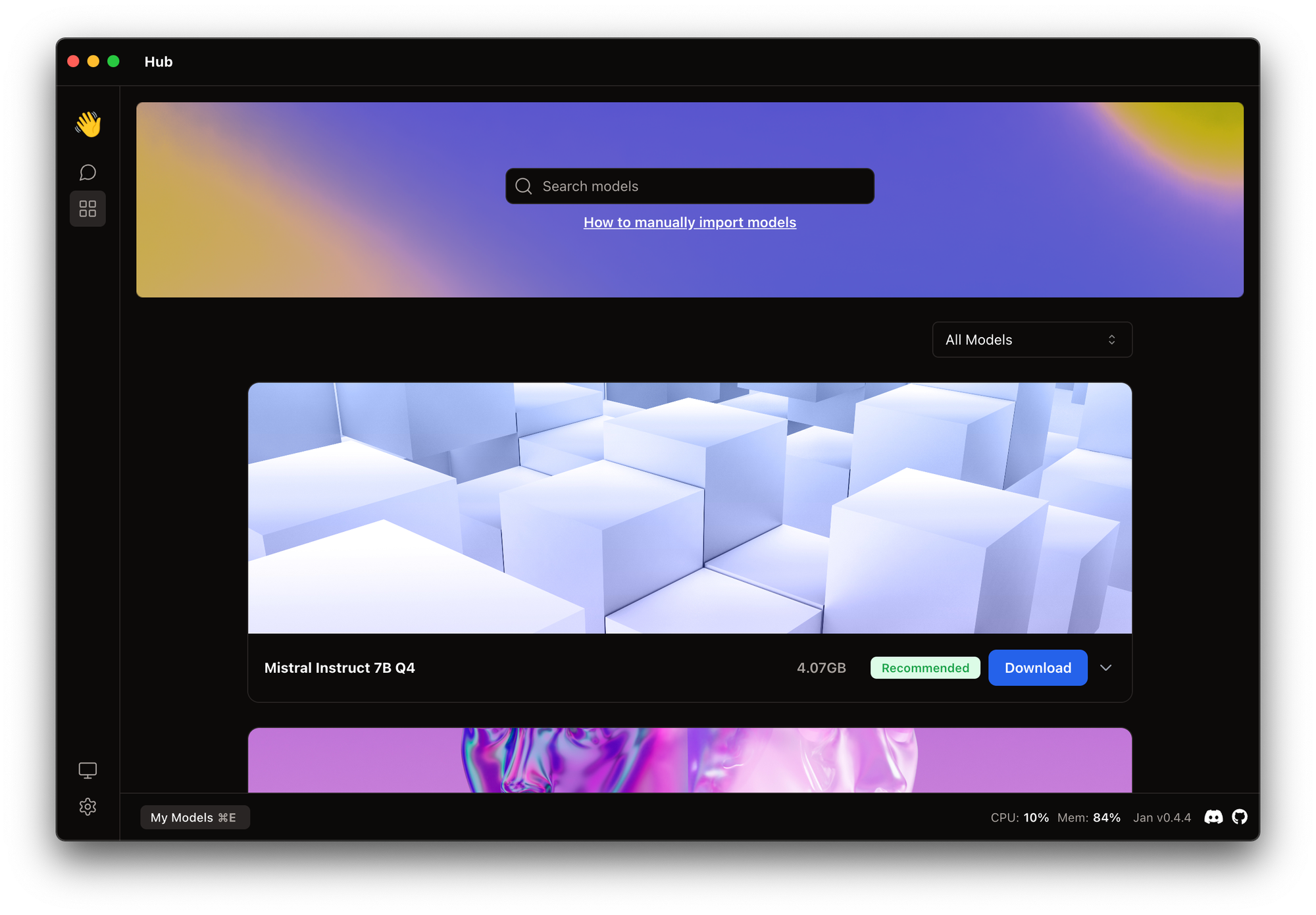

Before you can start chatting, you need to download the model weights. For convenience, Jan has a dedicated Hub section where you can download a model with a single click; the models available there include the well-known Mistral and Llama 2. More advanced users can also import models from Hugging Face manually.

Starting a chat

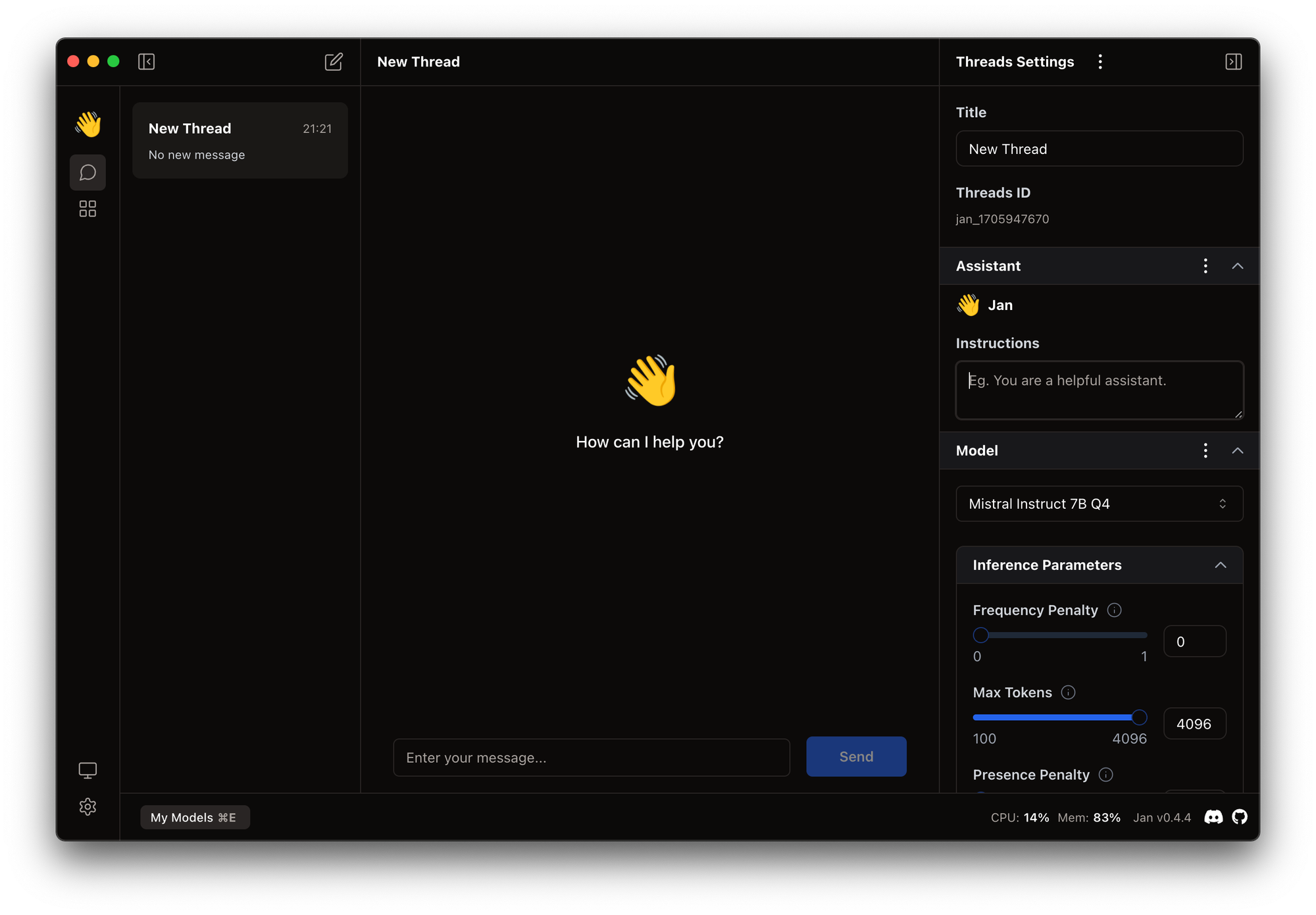

Once the model is downloaded, you can start talking to your personal assistant. Go to the Threads tab, and you will see a familiar chat start screen. The right-hand panel contains settings for the chat (chat name, assistant name, and so on) as well as parameters for the LLM itself. Make sure the Model field is set to the model you downloaded in the previous step.

To start a conversation, type and send any message (a greeting, for example), just as in ChatGPT. The first time, your computer will need some time to load the model into memory; subsequent responses will be generated much faster.

API

Jan can also run a local web server with an OpenAI-compatible API, which lets other programs use your local model. Another handy use of Jan is as a front end for GPT-4: when creating a chat, you can select GPT-4 as the model, enter your OpenAI API key, and talk to the OpenAI model through Jan. This is convenient if you don't want to keep a ChatGPT tab open in your browser all the time.
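To illustrate how another program might talk to this local server, here is a minimal Python sketch using only the standard library. It assumes the server has been started from Jan and listens at `http://localhost:1337/v1` (check the address Jan actually displays), and that it accepts the standard OpenAI `/chat/completions` request format. The helper names `build_chat_request` and `ask`, as well as any model id you pass in, are hypothetical and not part of Jan itself.

```python
import json
import urllib.request

# Assumption: Jan's local API server is running at this address.
# Adjust host/port to whatever Jan shows in its settings.
BASE_URL = "http://localhost:1337/v1"


def build_chat_request(prompt: str, model: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,  # hypothetical: use the model id Jan lists for your download
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str, base_url: str = BASE_URL, timeout: float = 120.0) -> str:
    """Send one user message to the local server and return the reply text."""
    request = build_chat_request(prompt, model, base_url)
    # A generous timeout, since the first request may wait for the model to load.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-style responses put the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running, a call such as `ask("Hello! Who are you?", model="<your-model-id>")` should return the model's reply as a string. Because the request format mirrors OpenAI's, existing OpenAI client code can often be pointed at the local server by changing only the base URL.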

Conclusion

Jan is a convenient, polished tool for running LLMs on your local machine without hassle. The application installs like any other program, and the built-in Hub makes downloading models just as easy. Jan can be useful to professionals, since it simplifies running and testing local models, and it also gives ordinary users an easy way into the world of open source LLMs.

We look forward to Jan coming to mobile devices!