Many research papers appear every day. This trend is especially noticeable for popular topics in recent years, such as Computer Vision and Natural Language Processing. As a Computer Vision researcher, I need to read several research papers related to my scientific interests every day to stay updated with the latest trends.

This gave me (and, I am sure, my colleagues) a reason to speed up the process of selecting and reading good scientific papers. The past year has been “the year of LLMs and LMMs”. Indeed, almost the whole world has learned what ChatGPT is. So I decided to test OpenAI's neural network as a research assistant. GPTs, announced last year, can already help you summarize research papers for an initial review.

GPT selection

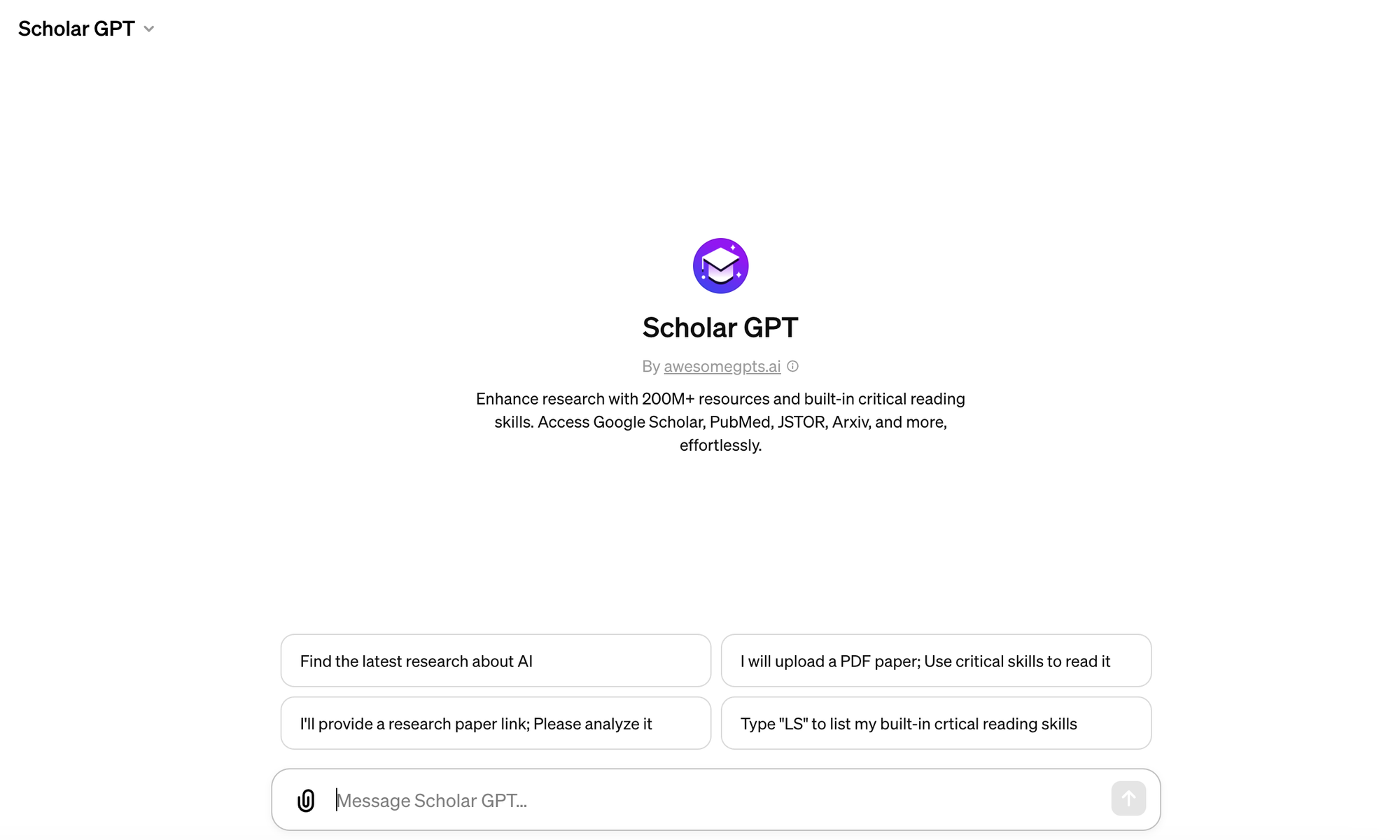

There are many GPTs that can automate writing a summary of a research paper. After trying several, I settled on Scholar GPT by awesomegpts.ai as the one that worked best for me.

Prompt engineering

The next, equally important stage is creating a prompt that produces the desired result. But before that, we need to decide what exactly we want to get from the chatbot. Personally, I expect the neural network to provide a summary of the main problems and ideas raised in the paper. Based on this automatically generated review, I decide whether I should read the work myself or not. This lets me filter out many works that are irrelevant to me.

To achieve this goal, I compiled the following prompt, which in most cases gave me the answers I wanted with the most specificity:

Please stick to this plan while writing the review:

1. Title, authors

2. Problem statement

3. A short description of the proposed method

4. Paper's novelty, contribution

6. Evaluation:

- datasets for training and evaluation

- metrics for evaluation

- conclusions from the comparison with reference to the tables and the figures

- ablation study ideas and results

7. Advantages and shortcomings

8. (optional) Usefulness for production

9. Find additional information about this paper: project page, GitHub repo, and Hugging Face demo
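If you review many papers, it can be convenient to generate this prompt from a paper link instead of retyping it. Below is a minimal sketch of how that could look. The names `REVIEW_PLAN` and `build_review_prompt` are my own and purely illustrative, and the sketch numbers the plan items consecutively (1 to 8); the resulting text is meant to be pasted into the chat by hand.

```python
# Review plan from the prompt above; each entry is (item, sub-bullets).
REVIEW_PLAN = [
    ("Title, authors", []),
    ("Problem statement", []),
    ("A short description of the proposed method", []),
    ("Paper's novelty, contribution", []),
    ("Evaluation:", [
        "datasets for training and evaluation",
        "metrics for evaluation",
        "conclusions from the comparison with reference to the tables and the figures",
        "ablation study ideas and results",
    ]),
    ("Advantages and shortcomings", []),
    ("(optional) Usefulness for production", []),
    ("Find additional information about this paper: "
     "project page, GitHub repo, and Hugging Face demo", []),
]


def build_review_prompt(paper_url: str) -> str:
    """Return the full review request for one paper link (hypothetical helper)."""
    lines = [
        f"Write a review of the paper at the link {paper_url}.",
        "",
        "Please stick to this plan while writing the review:",
    ]
    # Number the top-level items consecutively and list sub-bullets under them.
    for number, (item, bullets) in enumerate(REVIEW_PLAN, start=1):
        lines.append(f"{number}. {item}")
        lines.extend(f"- {bullet}" for bullet in bullets)
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_review_prompt("https://arxiv.org/pdf/2010.11929.pdf"))
```

Keeping the plan as data makes it easy to drop or reorder items, for example removing the optional production item for purely theoretical papers.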

Using HTML instead of PDF

Sometimes the chatbot has difficulty parsing a PDF document. Tables and images are especially likely to cause parsing problems. This matters because in Computer Vision papers, diagrams and tables of results usually need to be analyzed to complete the review. One of the most effective ways to solve this problem is to convert the PDF document to HTML. After conversion, you can send a link to the HTML version of a paper (or attach an HTML file) to a chat with Scholar GPT instead of a PDF.

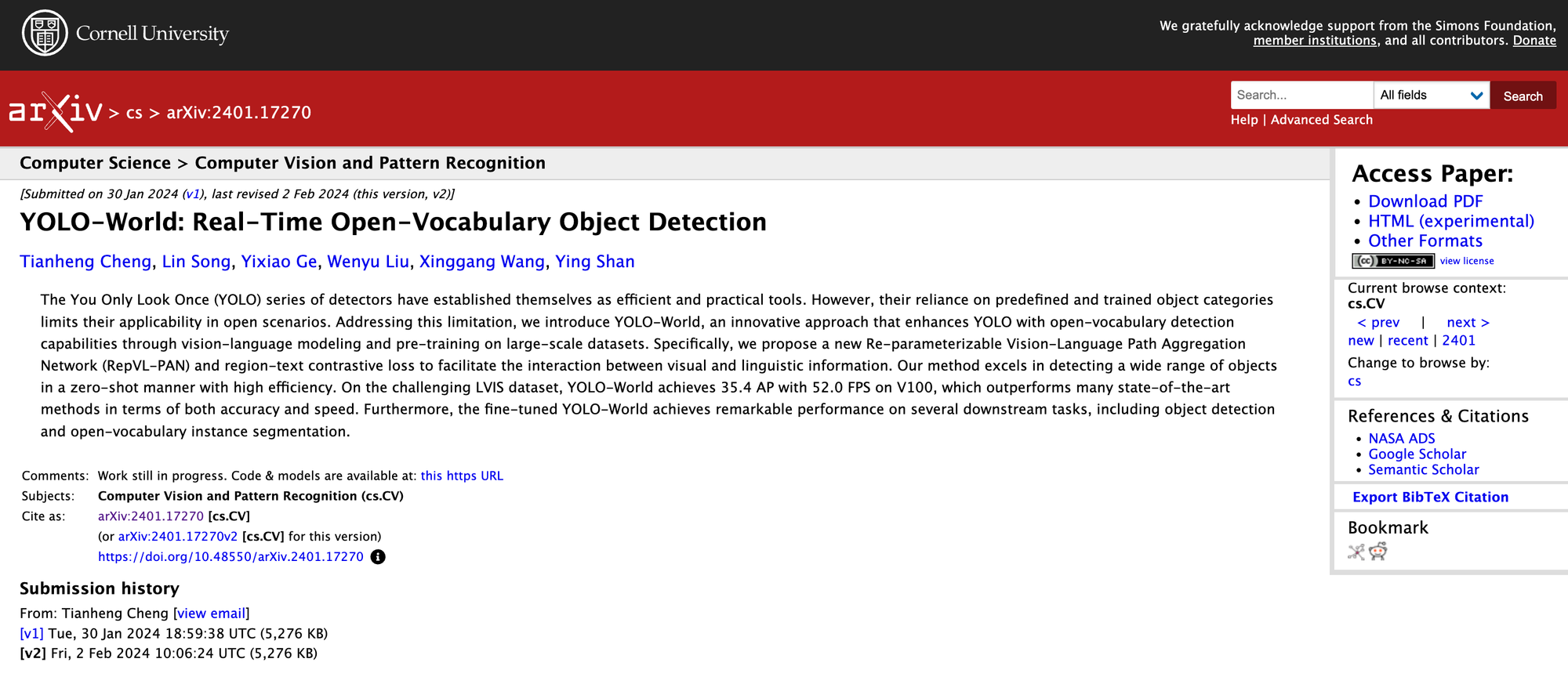

arXiv

Recently, a PDF to HTML conversion feature has been added to arXiv. However, this feature is only available for some papers. If you see the "HTML (experimental)" button on the paper page on the arXiv website, then you can view and download the HTML version of this work.

ar5iv

Another approach that works for almost any paper on arXiv is the ar5iv service. To view a paper in HTML format, replace the "x" in "arxiv" with "5" in the paper URL: for example, arxiv.org/abs/1910.06709 turns into ar5iv.org/abs/1910.06709.
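The URL substitution is simple enough to script. The following is a small sketch using only the Python standard library. The function name `to_ar5iv_url` is hypothetical, and the code only swaps the host name; it does not check that ar5iv actually has an HTML version of the paper.

```python
from urllib.parse import urlsplit, urlunsplit


def to_ar5iv_url(arxiv_url: str) -> str:
    """Swap the arxiv.org host for ar5iv.org, keeping the rest of the URL."""
    # Accept URLs written without a scheme, such as "arxiv.org/abs/1910.06709".
    has_scheme = "://" in arxiv_url
    parts = urlsplit(arxiv_url if has_scheme else "https://" + arxiv_url)

    if parts.netloc.lower() not in ("arxiv.org", "www.arxiv.org"):
        raise ValueError(f"not an arXiv URL: {arxiv_url}")

    converted = urlunsplit(parts._replace(netloc="ar5iv.org"))
    # Return the result in the same style (with or without scheme) as the input.
    return converted if has_scheme else converted[len("https://"):]


if __name__ == "__main__":
    print(to_ar5iv_url("arxiv.org/abs/1910.06709"))
```

Parsing the URL rather than doing a plain string replace avoids accidentally rewriting an "x" elsewhere in the address.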

Enthusiasts can run the ar5iv web service on their own server using the open source code from GitHub.

Example

I tested the proposed approach to automated summarization on a variety of papers from arXiv. In general, I would rate the results as good enough for a first look at a paper, which is what I need to decide whether it merits a more detailed reading.

Reviews written by ChatGPT are most often complete and correct, but sometimes the model's conclusions are too superficial or contain inaccuracies. In any case, it is better than nothing. Even with these limitations, I believe that ChatGPT can already make the work of researchers like me easier.

My prompt

Write a review of the paper at the link https://arxiv.org/pdf/2010.11929.pdf.

Please stick to this plan while writing the review:

1. Title, authors

2. Problem statement

3. A short description of the proposed method

4. Paper's novelty, contribution

6. Evaluation:

- datasets for training and evaluation

- metrics for evaluation

- conclusions from the comparison with reference to the tables and the figures

- ablation study ideas and results

7. Advantages and shortcomings

8. (optional) Usefulness for production

9. Find additional information about this paper: project page, GitHub repo, and Hugging Face demo

ChatGPT's summary

Review of "An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale"

Title and Authors: "An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale" by Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, Jakob Uszkoreit, Neil Houlsby (Google Research, Brain Team).

Problem Statement: The paper addresses the challenge of applying the Transformer architecture, which has seen great success in natural language processing (NLP), directly to the domain of computer vision for image recognition tasks without relying on convolutional neural networks (CNNs).

Proposed Method: The authors introduce the Vision Transformer (ViT), which applies a pure transformer directly to sequences of image patches (treated as "words") for classification tasks. The method involves splitting an image into fixed-size patches, linearly embedding each of them, adding position embeddings, and then processing the sequence of embeddings through a standard Transformer architecture.

Novelty and Contribution: The novelty of ViT lies in its departure from CNNs, demonstrating that a Transformer can directly learn from raw images divided into patches. The key contributions include showing that Transformers can achieve excellent results on image recognition tasks, especially when pre-trained on large datasets, and providing a scalable model that can be adapted to various image sizes and complexities with fewer computational resources than state-of-the-art CNNs.

Evaluation:

- Datasets for Training and Evaluation: The model was evaluated using standard benchmarks including ImageNet, CIFAR-100, and the VTAB suite of 19 tasks. It was pre-trained on large datasets (ImageNet-21k and JFT-300M).

- Metrics for Evaluation: Top-1 accuracy, comparison with state-of-the-art models, and computational efficiency were the primary evaluation metrics.

- Conclusions from Comparison with Reference to Tables: ViT outperforms or is competitive with state-of-the-art CNNs across most benchmarks, particularly when pre-trained on large datasets. For instance, the best ViT model achieves 88.55% accuracy on ImageNet, surpassing the previous state-of-the-art. Tables and figures in the paper (e.g., Table 2 and Figure 2) extensively document these findings.

- Ablation Study Ideas and Results: The paper includes ablation studies on aspects like the importance of the sequence length, the size of the patches, and the impact of different types of position embeddings. These studies demonstrate the robustness of ViT's architecture and the importance of scale in training data.

Advantages and Shortcomings:

- Advantages: ViT introduces a novel approach to image recognition that leverages the power of Transformers without CNNs, demonstrating scalability and adaptability across different datasets and tasks with notable computational efficiency.

- Shortcomings: ViT requires large-scale pre-training to outperform CNNs, which may not be feasible in all scenarios. Additionally, the lack of inherent inductive biases such as translation invariance and locality in Transformers compared to CNNs could limit its effectiveness on smaller datasets without substantial pre-training.

Usefulness for Production: ViT's scalability and efficiency in leveraging large datasets make it highly promising for production environments, especially where large-scale image recognition tasks are critical. Its adaptability to different image resolutions and tasks without significant architectural changes offers flexibility in deployment.

Additional Information:

- Project Page: Not explicitly mentioned but typically would be hosted on the Google Research website or a dedicated project page.

- GitHub Repo: The pre-trained models and fine-tuning code are available at https://github.com/google-research/vision_transformer.

- Hugging Face Demo: Not mentioned in the paper, but Hugging Face often hosts models for easy use and experimentation by the community, so it's worth checking their platform for a potential demo.